C++ Core Guidelines: Sharing Data between Threads

If you want to have fun with threads, you should share mutable data between them. To get no data race and, therefore, undefined behavior, you have to think about the synchronization of your threads.

The three rules in this post may be quite obvious for the experienced multithreading developer but crucial for the novice in the multithreading domain. Here are they:

- CP.20: Use RAII, never plain

lock()/unlock() - CP.21: Use

std::lock()orstd::scoped_lockto acquire multiplemutexes - CP.22: Never call unknown code while holding a lock (e.g., a callback)

Let’s start with the most obvious rule.

CP.20: Use RAII, never plain lock()/unlock()

No naked mutex! Put your mutex always in a lock. The lock will automatically release (unlock) the mutex if it goes out of scope. RAII stands for Resource Acquisition Is Initialization and means that you bind a resource’s lifetime to a local variable’s lifetime. C++ automatically manages the lifetime of locals.

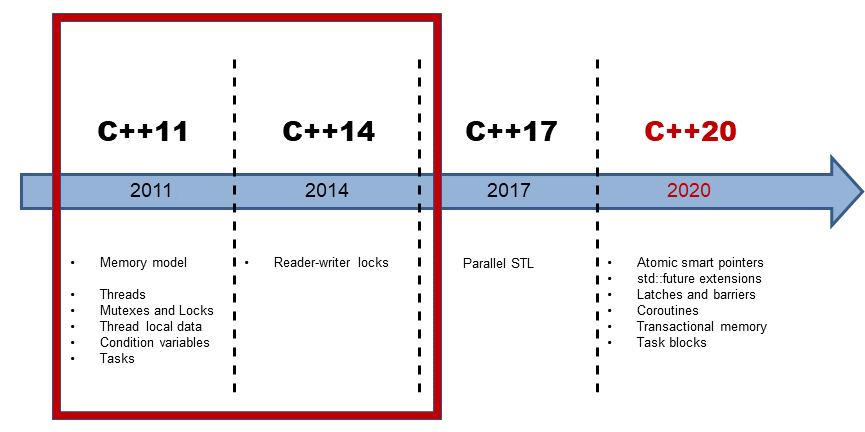

std::lock_guard, std::unique_lock, std::shared_lock (C++14), or std::std::scoped_lock (C++17) implement this pattern but also the smart pointers std::unique_ptr, and std::shared_ptr. My previous post Garbage Collection – No Thanks explains the details to RAII.

What does this mean for your multithreading code?

std::mutex mtx; void do_stuff() { mtx.lock(); // ... do stuff ... (1) mtx.unlock(); }

It doesn’t matter if an exception occurs in (1) or you just forgot to unlock the mtx; in both cases, you will get a deadlock if another thread wants to acquire (lock) the std::mutex mtx. The rescue is quite apparent.

Modernes C++ Mentoring

Modernes C++ Mentoring

Do you want to stay informed: Subscribe.

std::mutex mtx; void do_stuff() { std::lock_guard<std::mutex> lck {mtx}; // ... do stuff ... } // (1)

Put the mutex into a lock, and the mutex will automatically be unlocked at (1) because the lck goes out of scope.

CP.21: Use std::lock() or std::scoped_lock to acquire multiple mutexes

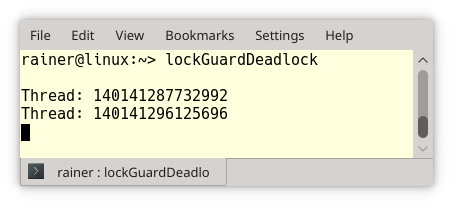

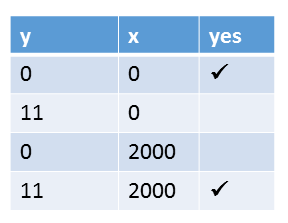

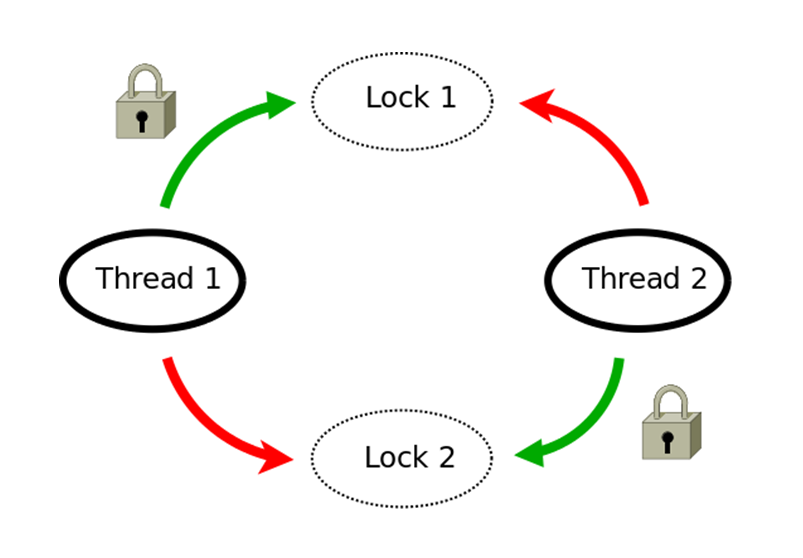

If a thread needs more than one mutex, you must be extremely careful that you lock the mutexes in the same sequence. If not, a bad interleaving of threads may cause a deadlock. The following program causes a deadlock.

// lockGuardDeadlock.cpp #include <iostream> #include <chrono> #include <mutex> #include <thread> struct CriticalData{ std::mutex mut; }; void deadLock(CriticalData& a, CriticalData& b){ std::lock_guard<std::mutex>guard1(a.mut); // (2) std::cout << "Thread: " << std::this_thread::get_id() << std::endl; std::this_thread::sleep_for(std::chrono::milliseconds(1)); std::lock_guard<std::mutex>guard2(b.mut); // (2) std::cout << "Thread: " << std::this_thread::get_id() << std::endl; // do something with a and b (critical region) (3) } int main(){ std::cout << std::endl; CriticalData c1; CriticalData c2; std::thread t1([&]{deadLock(c1, c2);}); // (1) std::thread t2([&]{deadLock(c2, c1);}); // (1) t1.join(); t2.join(); std::cout << std::endl; }

Threads t1 and t2 need two resources CriticalData, to perform their job (3). CriticalData has its own mutex mut to synchronize the access. Unfortunately, both invoke the function deadlock with the arguments c1 and c2 in a different sequence (1). Now we have a race condition. If thread t1 can lock the first mutex a.mut but not the second one b.mut because, in the meantime, thread t2 locks the second one, we will get a deadlock (2).

The easiest way to solve the deadlock is to lock both mutexes atomically.

With C++11, you can use a std::unique_lock together with std::lock. std::unique_lock, you can defer the locking of its mutex. The function std::lock, which can lock an arbitrary number of mutexes in an atomic way, does the locking finally.

void deadLock(CriticalData& a, CriticalData& b){ std::unique_lock<mutex> guard1(a.mut, std::defer_lock); std::unique_lock<mutex> guard2(b.mut, std::defer_lock); std::lock(guard1, guard2); // do something with a and b (critical region) }

With C++17, a std::scoped_lock can lock an arbitrary number of mutex in one atomic operation.

void deadLock(CriticalData& a, CriticalData& b){ std::scoped_lock(a.mut, b.mut); // do something with a and b (critical region }

CP.22: Never call unknown code while holding a lock (e.g., a callback)

Why is this code snippet bad?

std::mutex m;

{ std::lock_guard<std::mutex> lockGuard(m); sharedVariable = unknownFunction(); }

I can only speculate about the unknownFunction. If unknownFunction

- tries to lock the mutex m, that will be undefined behavior. Most of the time, you will get a deadlock.

- starts a new thread that tries to lock the mutex m, you will get a deadlock.

- locks another mutex m2, you may get a deadlock because you lock the two mutexes m and m2 simultaneously. Now another thread may lock the same mutexes in a different sequence.

- will not directly or indirectly try to lock the mutex m; all seems fine. “Seems” because your coworker can modify the function, or the function is dynamically linked, and you get a different version. All bets are open to what may happen.

- work as expected, you may have a performance problem because you don’t know how long the function unknownFunction would take. What is meant to be a multithreaded program may become a single-threaded program.

To solve these issues, use a local variable:

std::mutex m;

auto tempVar = unknownFunction(); { std::lock_guard<std::mutex> lockGuard(m); sharedVariable = tempVar; }

This additional indirection solves all issues. tempVar is a local variable and can not be a victim of a data race. This means that you can invoke unknownFunction without a synchronization mechanism. Additionally, the time for holding a lock is reduced to its bare minimum: assigning the value of tempVar to sharedVariable.

What’s next?

If you don’t call join or detach on your created thread child, the child will throw a std::terminate exception in its destructor. std::terminatecalls per default std::abort. To overcome this issue, the guidelines support library has a gsl::joining_thread which calls join at the end of its scope. I will have a closer look at gsl::joining_thread in my next post.

Thanks a lot to my Patreon Supporters: Matt Braun, Roman Postanciuc, Tobias Zindl, G Prvulovic, Reinhold Dröge, Abernitzke, Frank Grimm, Sakib, Broeserl, António Pina, Sergey Agafyin, Андрей Бурмистров, Jake, GS, Lawton Shoemake, Jozo Leko, John Breland, Venkat Nandam, Jose Francisco, Douglas Tinkham, Kuchlong Kuchlong, Robert Blanch, Truels Wissneth, Mario Luoni, Friedrich Huber, lennonli, Pramod Tikare Muralidhara, Peter Ware, Daniel Hufschläger, Alessandro Pezzato, Bob Perry, Satish Vangipuram, Andi Ireland, Richard Ohnemus, Michael Dunsky, Leo Goodstadt, John Wiederhirn, Yacob Cohen-Arazi, Florian Tischler, Robin Furness, Michael Young, Holger Detering, Bernd Mühlhaus, Stephen Kelley, Kyle Dean, Tusar Palauri, Juan Dent, George Liao, Daniel Ceperley, Jon T Hess, Stephen Totten, Wolfgang Fütterer, Matthias Grün, Ben Atakora, Ann Shatoff, Rob North, Bhavith C Achar, Marco Parri Empoli, Philipp Lenk, Charles-Jianye Chen, Keith Jeffery, Matt Godbolt, Honey Sukesan, bruce_lee_wayne, Silviu Ardelean, schnapper79, Seeker, and Sundareswaran Senthilvel.

Thanks, in particular, to Jon Hess, Lakshman, Christian Wittenhorst, Sherhy Pyton, Dendi Suhubdy, Sudhakar Belagurusamy, Richard Sargeant, Rusty Fleming, John Nebel, Mipko, Alicja Kaminska, Slavko Radman, and David Poole.

| My special thanks to Embarcadero |  |

| My special thanks to PVS-Studio |  |

| My special thanks to Tipi.build |  |

| My special thanks to Take Up Code |  |

| My special thanks to SHAVEDYAKS |  |

Modernes C++ GmbH

Modernes C++ Mentoring (English)

Rainer Grimm

Yalovastraße 20

72108 Rottenburg

Mail: schulung@ModernesCpp.de

Mentoring: www.ModernesCpp.org

Leave a Reply

Want to join the discussion?Feel free to contribute!