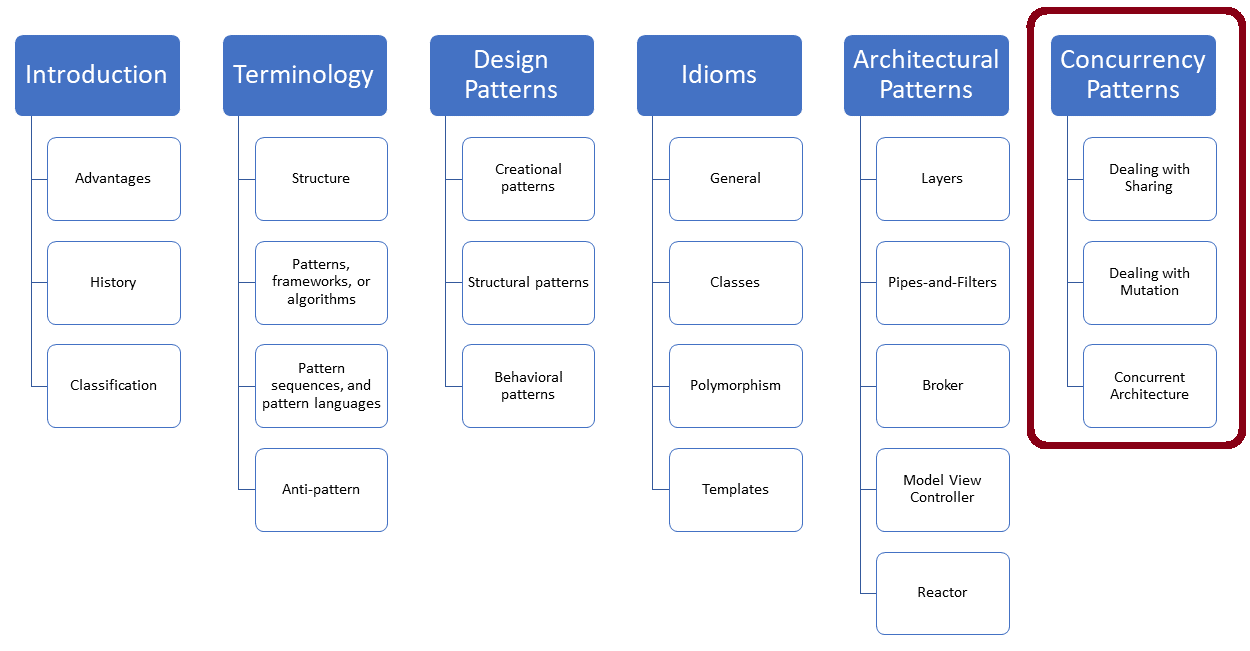

Concurrency Patterns

There are many well-established patterns used in the concurrency domain. They deal with synchronization challenges such as sharing and mutation, but also with concurrent architectures. Today, I will give an overview of them; I will dive deeper into each of them in additional posts.

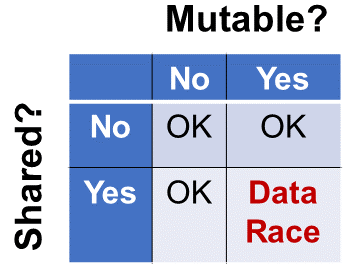

The main concern when you deal with concurrency is shared, mutable state or, as Tony Van Eerd put it in his CppCon 2014 talk “Lock-free by Example”: “Forget what you learned in Kindergarten (ie stop Sharing)”. A crucial term for concurrency is a data race. Let me first define this term.

- Data race: A data race is when at least two threads access a shared variable simultaneously. At least one thread tries to modify the variable. If your program has a data race, it has undefined behavior. This means all outcomes are possible, so reasoning about the program makes no sense anymore.

A necessary condition for a data race is shared, mutable state. If you deal with either the sharing or the mutation, no data race can happen. This is precisely the focus of the synchronization patterns. Classics such as Active Object and Monitor Object also address the concurrent architecture.

Synchronization Patterns

The focus of the synchronization patterns is to deal with sharing and mutation.

Dealing with Sharing

If you don’t share, no data races can happen. Not sharing means that your thread works only on its own local data. This can be achieved by a Copied Value, by using Thread-Specific Storage, or by transferring the result of a thread to its associated Future via a protected data channel.

Copied Value

If a thread gets its arguments by copy and not by reference, there is no need to synchronize access to any data. No data races and no lifetime issues are possible.
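Here is a minimal sketch of the idea. The names appendExclamation and runWithCopy are made up for this illustration. std::thread copies its arguments before the new thread starts, so the calling thread can keep modifying its own string without any synchronization.

```cpp
#include <string>
#include <thread>

// Hypothetical worker: takes its argument by value, so it owns a private copy.
void appendExclamation(std::string text) {
    text += "!";  // modifies only the thread's own copy
}

// Hypothetical helper: starts a worker with a copy of `original` and
// modifies `original` while the worker may still be running.
std::string runWithCopy(std::string original) {
    std::thread worker(appendExclamation, original);  // argument is copied here
    original += "?";  // no data race: the worker never sees this string
    worker.join();
    return original;
}
```

Calling runWithCopy("hi") returns "hi?": the change made by the worker is not visible to the caller. Passing the argument with std::ref instead would reintroduce sharing and, with it, the need for synchronization.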

Thread-Specific Storage

Thread-specific or thread-local storage allows multiple threads to use local storage via a global access point. By using the storage class specifier thread_local, a variable becomes a thread-local variable: each thread gets its own instance. This means you can use the thread-local variable without synchronization.
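The following sketch shows the effect, assuming a made-up counter callCount and two made-up helper functions. Every thread increments the same name, but each one works on its own instance.

```cpp
#include <cstddef>
#include <thread>
#include <vector>

// One instance of callCount exists per thread (hypothetical example variable).
thread_local int callCount = 0;

// Increments the calling thread's own counter n times and returns it.
int countCalls(int n) {
    for (int i = 0; i < n; ++i) ++callCount;  // no lock needed
    return callCount;
}

// Runs countCalls in several threads; each thread writes only its own slot.
std::vector<int> countInThreads(std::size_t numThreads, int n) {
    std::vector<int> results(numThreads);
    std::vector<std::thread> threads;
    for (std::size_t t = 0; t < numThreads; ++t) {
        threads.emplace_back([&results, t, n] { results[t] = countCalls(n); });
    }
    for (auto& th : threads) th.join();
    return results;
}
```

Each entry of the returned vector equals n, regardless of the number of threads, and the callCount of the calling thread stays untouched.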


Futures

C++11 provides futures and promises in three flavors: std::async, std::packaged_task, and the pair std::promise and std::future. The future is a read-only placeholder for the value that a promise sets. From the synchronization perspective, a promise/future pair’s critical property is that a protected data channel connects both.
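A minimal sketch of two of these flavors follows. The function names computeViaPromise and computeViaAsync are assumptions for this example. In both cases the value travels through the shared state of the promise/future pair, so no explicit lock is needed. The sketch uses a C++14 init capture to move the promise into the thread.

```cpp
#include <future>
#include <thread>
#include <utility>

// Explicit pair: the producer thread sets the value, the caller reads it.
int computeViaPromise(int a, int b) {
    std::promise<int> promise;
    std::future<int> future = promise.get_future();
    std::thread producer([p = std::move(promise), a, b]() mutable {
        p.set_value(a * b);  // write end of the data channel
    });
    int result = future.get();  // read end: blocks until the value is set
    producer.join();
    return result;
}

// std::async creates the promise/future pair behind the scenes.
int computeViaAsync(int a, int b) {
    std::future<int> future =
        std::async(std::launch::async, [a, b] { return a + b; });
    return future.get();
}
```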

Dealing with Mutation

No data race can happen if you don’t write and read data concurrently. The first step is to protect the critical sections with a lock, using Scoped Locking or Strategized Locking. In object-oriented design, a critical section is typically an object, including its interface. The Thread-Safe Interface protects the entire object. The idea of Guarded Suspension is that a thread sends a signal when it is done with its work, so that a waiting thread can continue.

Scoped Locking

Scoped locking is the idea of RAII applied to a mutex. The key idea of this idiom is to bind the resource acquisition and release to an object’s lifetime. As the name suggests, the lifetime of the object is scoped. Scoped means that the C++ run time is responsible for object destruction and, therefore, for releasing the resource.
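As a sketch, std::lock_guard from the standard library is one implementation of this idiom. The class Counter and the helper incrementConcurrently are made up for the example.

```cpp
#include <mutex>
#include <thread>
#include <vector>

class Counter {
public:
    void increment() {
        std::lock_guard<std::mutex> guard(mutex_);  // constructor locks
        ++value_;
    }  // destructor of guard unlocks, also if an exception is thrown

    int value() const {
        std::lock_guard<std::mutex> guard(mutex_);
        return value_;
    }

private:
    mutable std::mutex mutex_;
    int value_ = 0;
};

// Hypothetical helper: several threads increment one shared Counter.
int incrementConcurrently(int numThreads, int perThread) {
    Counter counter;
    std::vector<std::thread> threads;
    for (int t = 0; t < numThreads; ++t) {
        threads.emplace_back([&counter, perThread] {
            for (int i = 0; i < perThread; ++i) counter.increment();
        });
    }
    for (auto& th : threads) th.join();
    return counter.value();
}
```

Because the unlock happens in the destructor, there is no code path on which the mutex stays locked by accident.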

Strategized Locking

Strategized locking is the idea of the strategy pattern applied to locking. This means putting your locking strategy into an object and making it into a pluggable component of your system.
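One possible sketch is the compile-time variant, in which the lock type is a template parameter. The names NoLock, StrategizedCounter, and countWithThreads are assumptions for this illustration; a run-time variant based on an abstract lock interface would work as well.

```cpp
#include <mutex>
#include <thread>
#include <vector>

// Null strategy for single-threaded use: locking costs nothing.
struct NoLock {
    void lock() {}
    void unlock() {}
};

// The locking strategy is plugged in as a template parameter.
template <typename Lock>
class StrategizedCounter {
public:
    void increment() {
        std::lock_guard<Lock> guard(lock_);  // works with any lock()/unlock() type
        ++value_;
    }
    int value() const {
        std::lock_guard<Lock> guard(lock_);
        return value_;
    }

private:
    mutable Lock lock_;
    int value_ = 0;
};

// Hypothetical helper: increments a counter from numThreads worker threads.
// With NoLock it is only safe for numThreads == 1.
template <typename Lock>
int countWithThreads(int numThreads, int perThread) {
    StrategizedCounter<Lock> counter;
    std::vector<std::thread> threads;
    for (int t = 0; t < numThreads; ++t) {
        threads.emplace_back([&counter, perThread] {
            for (int i = 0; i < perThread; ++i) counter.increment();
        });
    }
    for (auto& th : threads) th.join();
    return counter.value();
}
```

StrategizedCounter<std::mutex> is the thread-safe configuration, while StrategizedCounter<NoLock> avoids the locking overhead when only one thread uses the counter.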

Thread-Safe Interface

The Thread-Safe Interface fits very well when the critical sections are just objects. The naive idea to protect all member functions with a lock causes, in the best case, a performance issue and, in the worst case, a deadlock.

The thread-safe interface overcomes both issues. Here is the straightforward idea:

- All interface member functions (public) should use a lock.

- All implementation member functions (protected and private) must not use a lock.

- The interface member functions call only protected or private member functions but no public member functions.
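The three rules above can be sketched with a made-up Account class. The public member functions lock once and then delegate to private member functions that assume the lock is already held.

```cpp
#include <mutex>

class Account {
public:
    // Interface member functions: each one takes the lock exactly once.
    void deposit(int amount) {
        std::lock_guard<std::mutex> guard(mutex_);
        addUnlocked(amount);
    }

    bool withdraw(int amount) {
        std::lock_guard<std::mutex> guard(mutex_);
        // Calling the public balance() here would lock mutex_ a second time,
        // which is undefined behavior for std::mutex (typically a deadlock).
        if (balanceUnlocked() < amount) return false;
        addUnlocked(-amount);
        return true;
    }

    int balance() const {
        std::lock_guard<std::mutex> guard(mutex_);
        return balanceUnlocked();
    }

private:
    // Implementation member functions: never lock, caller holds mutex_.
    void addUnlocked(int amount) { balance_ += amount; }
    int balanceUnlocked() const { return balance_; }

    mutable std::mutex mutex_;
    int balance_ = 0;
};
```

The check and the update in withdraw happen under one lock, so they form a single atomic step from the perspective of other threads.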

Guarded Suspension

The basic variant of Guarded Suspension combines a lock and a precondition that must be satisfied. If the precondition is not fulfilled, the calling thread puts itself to sleep. The thread checks the precondition while holding the lock to avoid a race condition that may result in a data race or a deadlock.

Various variants exist:

- The waiting thread can passively be notified about the state change or actively ask for the state change.

- The waiting can be done with or without a time boundary.

- The notification can be sent to one or all waiting threads.
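Here is a minimal sketch of the passive variant, based on std::condition_variable. The class DataReady and the helper receiveFromThread are made up for the example. It shows waiting both without and with a time boundary and notifies all waiting threads.

```cpp
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <thread>

class DataReady {
public:
    // Sender: changes the state under the lock, then notifies all waiters.
    void publish(int value) {
        {
            std::lock_guard<std::mutex> guard(mutex_);
            data_ = value;
            ready_ = true;
        }
        condVar_.notify_all();
    }

    // Receiver without time boundary: sleeps until the precondition holds.
    int waitForData() {
        std::unique_lock<std::mutex> lock(mutex_);
        condVar_.wait(lock, [this] { return ready_; });  // predicate guards against spurious wakeups
        return data_;
    }

    // Receiver with time boundary: returns false if the timeout expires first.
    bool waitForDataFor(std::chrono::milliseconds timeout, int& out) {
        std::unique_lock<std::mutex> lock(mutex_);
        if (!condVar_.wait_for(lock, timeout, [this] { return ready_; })) return false;
        out = data_;
        return true;
    }

private:
    std::mutex mutex_;
    std::condition_variable condVar_;
    bool ready_ = false;  // the precondition
    int data_ = 0;
};

// Hypothetical helper: one thread publishes, the caller waits for the value.
int receiveFromThread(int value) {
    DataReady channel;
    std::thread sender([&channel, value] { channel.publish(value); });
    int received = channel.waitForData();
    sender.join();
    return received;
}
```

The predicate passed to wait and wait_for is the precondition. It is evaluated with the lock held, so a notification that arrives before the receiver starts waiting is not lost.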

Concurrent Architecture

The Active Object and Monitor Object synchronize and schedule member function invocation. The main difference is that the Active Object executes its member functions in a different thread, whereas the Monitor Object executes them in the same thread as the client.

Active Object

The active object design pattern decouples method execution from method invocation for objects that each reside in their own thread of control. The goal is to introduce concurrency, by using asynchronous method invocation and a scheduler for handling requests. (Wikipedia:Active Object)
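A strongly simplified sketch of the pattern could look as follows. The class ActiveAdder and its member names are assumptions for this illustration, and the roles of the full pattern (proxy, activation list, scheduler, servant) are collapsed into one class. A call only enqueues a request and returns a future; the worker thread executes the request later.

```cpp
#include <condition_variable>
#include <deque>
#include <functional>
#include <future>
#include <memory>
#include <mutex>
#include <thread>
#include <utility>

class ActiveAdder {
public:
    ActiveAdder() : worker_([this] { run(); }) {}

    ~ActiveAdder() {
        {
            std::lock_guard<std::mutex> guard(mutex_);
            done_ = true;
        }
        condVar_.notify_one();
        worker_.join();  // pending requests are drained before the thread ends
    }

    // Invocation: returns immediately, the result arrives via the future.
    std::future<int> add(int a, int b) {
        return submit([a, b] { return a + b; });
    }

    // Reports the id of the thread that executes the requests.
    std::future<std::thread::id> executingThread() {
        return submit([] { return std::this_thread::get_id(); });
    }

private:
    template <typename F>
    auto submit(F f) -> std::future<decltype(f())> {
        using R = decltype(f());
        auto task = std::make_shared<std::packaged_task<R()>>(std::move(f));
        std::future<R> result = task->get_future();
        {
            std::lock_guard<std::mutex> guard(mutex_);
            queue_.push_back([task] { (*task)(); });
        }
        condVar_.notify_one();
        return result;
    }

    // Scheduler loop: executes requests one by one in the worker thread.
    void run() {
        for (;;) {
            std::function<void()> request;
            {
                std::unique_lock<std::mutex> lock(mutex_);
                condVar_.wait(lock, [this] { return done_ || !queue_.empty(); });
                if (queue_.empty()) return;  // done_ is set and nothing is left
                request = std::move(queue_.front());
                queue_.pop_front();
            }
            request();  // execution is decoupled from invocation
        }
    }

    std::mutex mutex_;
    std::condition_variable condVar_;
    std::deque<std::function<void()>> queue_;
    bool done_ = false;
    std::thread worker_;  // declared last: starts after the other members exist
};
```

A client writes adder.add(40, 2).get() to obtain the result. The thread id returned by executingThread differs from the id of the calling thread, which shows that execution happens in the Active Object's own thread of control.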

Monitor Object

The monitor object design pattern synchronizes concurrent member function execution to ensure that only one member function at a time runs within an object. It also allows an object’s member functions to schedule their execution sequences cooperatively. (Pattern-Oriented Software Architecture: Patterns for Concurrent and Networked Objects)
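As a sketch, a small thread-safe queue can play the role of the monitor object. The class MonitorQueue and the helper sumThroughMonitor are made up for the example. The monitor lock ensures that only one member function runs at a time, and the condition variable lets put and take cooperate.

```cpp
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>

class MonitorQueue {
public:
    // Synchronized member function: runs in the client's thread.
    void put(int value) {
        {
            std::lock_guard<std::mutex> guard(monitorLock_);
            items_.push(value);
        }
        notEmpty_.notify_one();  // wakes one client waiting in take()
    }

    // Suspends the calling client until an item is available.
    int take() {
        std::unique_lock<std::mutex> lock(monitorLock_);
        notEmpty_.wait(lock, [this] { return !items_.empty(); });
        int value = items_.front();
        items_.pop();
        return value;
    }

private:
    std::mutex monitorLock_;            // monitor lock
    std::condition_variable notEmpty_;  // monitor condition
    std::queue<int> items_;
};

// Hypothetical helper: a producer thread puts 1..count, the caller takes them.
int sumThroughMonitor(int count) {
    MonitorQueue queue;
    std::thread producer([&queue, count] {
        for (int i = 1; i <= count; ++i) queue.put(i);
    });
    int sum = 0;
    for (int i = 0; i < count; ++i) sum += queue.take();
    producer.join();
    return sum;
}
```

In contrast to an Active Object, no extra thread belongs to the queue: put and take run in the thread of whichever client calls them.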

What’s Next?

In my next post, I will dive into the synchronization patterns and write, in particular, about the patterns that deal with sharing.



Rainer Grimm
