C++ Core Guidelines: Use Tools to Validate your Concurrent Code

Today, I’m happy to write about what is probably the most important concurrency rule in the C++ Core Guidelines: Whenever feasible, use tools to validate your concurrent code. Not all, but many bugs can be detected with tools, and each fixed bug is a good bug. Here are two tools that have given me valuable help in the last few years: ThreadSanitizer and CppMem.

Here is the rule for today:

CP.9: Whenever feasible use tools to validate your concurrent code

This post is based on personal experience. On the one hand, many students in my multithreading classes wrote programs with data races; on the other hand, I have implemented many multithreaded programs with bugs myself. How can I be sure? Because of the dynamic code analysis tool ThreadSanitizer and the static code analysis tool CppMem. The use cases for ThreadSanitizer and CppMem are different.

ThreadSanitizer gives you the big picture and detects whether an execution of your program has a data race. CppMem gives you detailed insight into small pieces of code, most of the time including atomics. It answers the question: Which interleavings are possible according to the memory model?

Let’s start with ThreadSanitizer.

ThreadSanitizer

Here is the official introduction to ThreadSanitizer: “ThreadSanitizer (aka TSan) is a data race detector for C/C++. Data races are one of the most common and hardest-to-debug types of bugs in concurrent systems. A data race occurs when two threads access the same variable concurrently, and at least one of the accesses is a write. C++11 standard officially bans data races as undefined behavior.”

ThreadSanitizer is part of clang 3.2 and gcc 4.8; it supports Linux x86_64, and is tested on Ubuntu 12.04. You have to compile and link with -fsanitize=thread. In addition, enable optimization for reasonable performance and use the flag -g to produce debugging information, for example: -fsanitize=thread -O2 -g.


The runtime overhead is significant: the memory usage may increase by 5-10x and the execution time by 2-20x. But of course, you know the principal law of software development: Your program should first be correct, then fast.
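To make the flags concrete, here is a minimal sketch of a program you could feed to ThreadSanitizer. The file name raceDemo.cpp, the function names, and the build line in the comment are my assumptions for illustration; they are not taken from the ThreadSanitizer documentation. The first function contains a deliberate data race, the second one shows the same work with an atomic counter.

```cpp
// raceDemo.cpp (hypothetical file name)
// One possible build line, assuming a GCC or Clang toolchain on Linux:
//   g++ -fsanitize=thread -O2 -g raceDemo.cpp -o raceDemo -pthread

#include <atomic>
#include <thread>

// Deliberate data race: both threads modify counter without synchronization.
// Calling this function is undefined behavior; it only exists to give
// a race detector something to report.
int racyCount(int iterations) {
    int counter = 0;
    auto work = [&] {
        for (int i = 0; i < iterations; ++i) ++counter;
    };
    std::thread t1(work);
    std::thread t2(work);
    t1.join();
    t2.join();
    return counter;
}

// Same work without a data race: the counter is atomic.
int atomicCount(int iterations) {
    std::atomic<int> counter{0};
    auto work = [&] {
        for (int i = 0; i < iterations; ++i) {
            counter.fetch_add(1, std::memory_order_relaxed);
        }
    };
    std::thread t1(work);
    std::thread t2(work);
    t1.join();
    t2.join();
    return counter.load();
}
```

Calling racyCount from main in an instrumented build should typically produce a data race report, while atomicCount should stay silent and always return twice the iteration count.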

The ping-pong game

Now, let’s see ThreadSanitizer in action. Here is a typical exercise on condition variables that I have often given in my multithreading classes:

Write a small ping pong game.

- Two threads should alternately set a bool value to true or false. One thread sets the value to true and notifies the other thread. The other thread sets the value to false and notifies the original thread. The game should end after a fixed number of iterations.

And this is the typical implementation my students came up with.

```cpp
// conditionVariablePingPong.cpp

#include <condition_variable>
#include <iostream>
#include <mutex>
#include <thread>

bool dataReady= false;                                   // (3)

std::mutex mutex_;
std::condition_variable condVar1;
std::condition_variable condVar2;

int counter=0;
int COUNTLIMIT=50;

void setTrue(){                                          // (1)

  while(counter <= COUNTLIMIT){                          // (7)

    std::unique_lock<std::mutex> lck(mutex_);
    condVar1.wait(lck, []{return dataReady == false;});  // (4)
    dataReady= true;
    ++counter;                                           // (5)
    std::cout << dataReady << std::endl;
    condVar2.notify_one();                               // (6)

  }

}

void setFalse(){                                         // (2)

  while(counter < COUNTLIMIT){                           // (8)

    std::unique_lock<std::mutex> lck(mutex_);
    condVar2.wait(lck, []{return dataReady == true;});
    dataReady= false;
    std::cout << dataReady << std::endl;
    condVar1.notify_one();

  }

}

int main(){

  std::cout << std::boolalpha << std::endl;

  std::cout << "Begin: " << dataReady << std::endl;

  std::thread t1(setTrue);
  std::thread t2(setFalse);

  t1.join();
  t2.join();

  dataReady= false;
  std::cout << "End: " << dataReady << std::endl;

  std::cout << std::endl;

}
```

Function setTrue (1) sets the boolean value dataReady (3) to true, and function setFalse (2) sets it to false. The game starts with setTrue. The condition variable in the function waits for the notification and therefore first checks the boolean dataReady (4). Afterward, the function increments the counter (5) and notifies the other thread with the help of the condition variable condVar2 (6). The function setFalse follows the same workflow. If counter becomes equal to COUNTLIMIT (7), the game ends. Fine? NO!

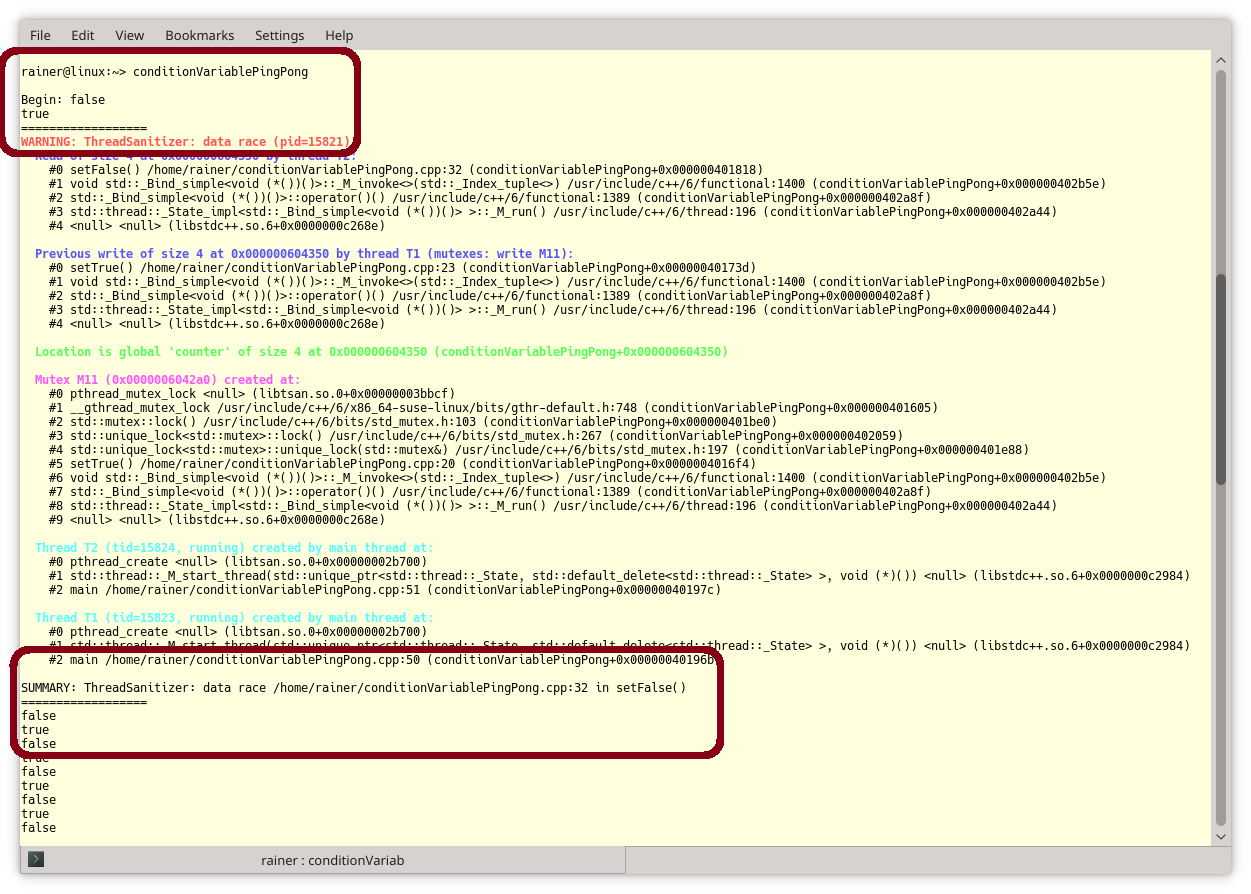

There is a data race on the counter. It is read (8) and written (5) and the same time. Thanks to ThreadSanitizer. Here is the proof.
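One possible way to get rid of this race is to stop sharing the counter at all. The following is only a sketch under my own assumptions, not the solution from my classes: the helper playPingPong and the history vector are hypothetical names I introduce here. Each thread runs a fixed number of rounds with its own loop variable, and every shared variable is touched only while the mutex is held.

```cpp
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical helper: plays `rounds` ping-pong rounds and returns
// the sequence of values that dataReady took.
std::vector<bool> playPingPong(int rounds) {
    std::mutex mutex_;
    std::condition_variable condVar1;
    std::condition_variable condVar2;
    bool dataReady = false;
    std::vector<bool> history;  // only accessed while mutex_ is held

    std::thread setTrue([&] {
        for (int i = 0; i < rounds; ++i) {  // loop variable is thread-local
            std::unique_lock<std::mutex> lck(mutex_);
            condVar1.wait(lck, [&] { return !dataReady; });
            dataReady = true;
            history.push_back(dataReady);
            condVar2.notify_one();
        }
    });

    std::thread setFalse([&] {
        for (int i = 0; i < rounds; ++i) {
            std::unique_lock<std::mutex> lck(mutex_);
            condVar2.wait(lck, [&] { return dataReady; });
            dataReady = false;
            history.push_back(dataReady);
            condVar1.notify_one();
        }
    });

    setTrue.join();
    setFalse.join();
    return history;
}
```

Because both threads perform exactly the same number of rounds, neither has to inspect a shared counter outside the lock, and the returned sequence strictly alternates between true and false.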

ThreadSanitizer detects data races at runtime; CppMem lets you analyze small code snippets.

CppMem

I will only provide a short overview of CppMem.

The online tool, which you can also install on your PC, provides very valuable services.

- CppMem validates small code snippets, typically including atomics.

- The very accurate analysis of CppMem gives you a deep insight into the C++ memory model.

For deeper insight, I have already written a few posts about CppMem. For now, I will only refer to the first point and provide you with a 10,000-foot overview.

A Short Overview

My simplified overview uses the default configuration of the tool. This overview should give you the base for further experiments.

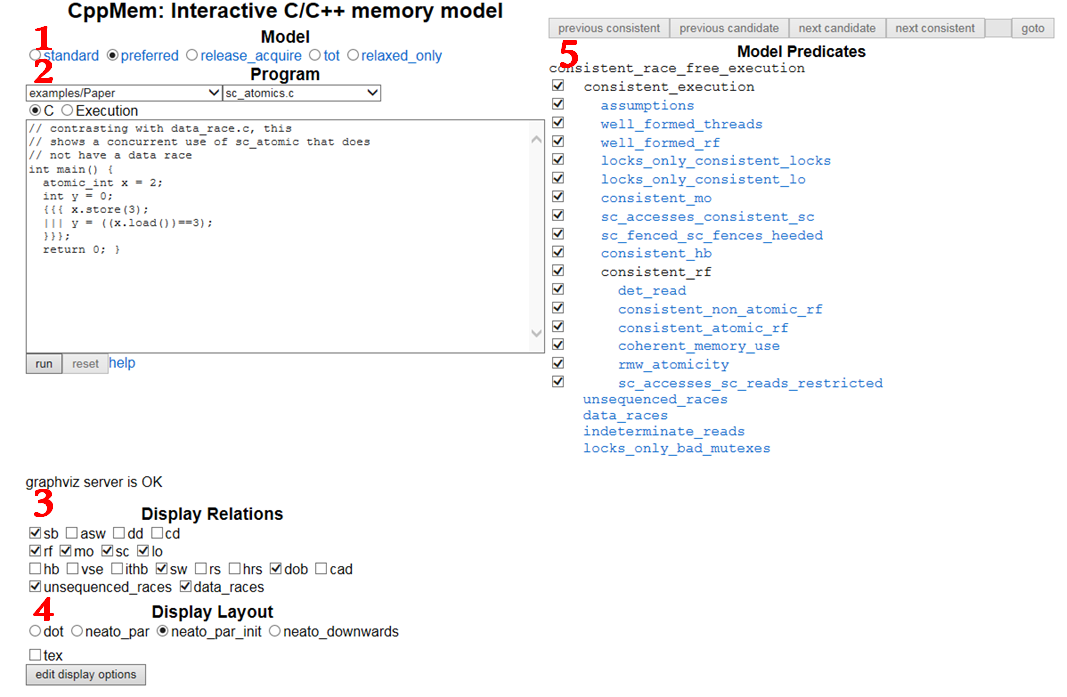

I will refer to the red numbers in the following screenshot for simplicity reasons.

1. Model

    - Specifies the memory model. preferred is a memory model equivalent to the C++11 memory model.

2. Program

    - Contains the executable program in a C or C++-like syntax.

    - CppMem offers a number of typical atomic workflows. To get the details of these programs, read the well-written article Mathematizing C++ Concurrency. Of course, you can also use your own code.

    - CppMem is about multithreading, so there are a few simplifications:

        - You can easily define two threads with the symbols {{{ … ||| … }}}. The three dots (…) stand for the work package of each thread.

3. Display Relations

    - Describes the relations between the read, write, and read-write modifications on atomic operations, fences, and locks.

    - If a relation is enabled, it is displayed in the annotated graph (see point 6).

    - Here are a few relations:

        - sb: sequenced-before

        - rf: reads-from

        - mo: modification order

        - sc: sequentially consistent

        - lo: lock order

        - sw: synchronizes-with

        - dob: dependency-ordered-before

        - data_races

4. Display Layout

    - With this switch, you can choose which Doxygraph graph is used.

5. Choose the Executions

    - Switches between the various consistent executions.

6. Annotated Graph

    - Displays the annotated graph.
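To give a feeling for what "various consistent executions" means, here is a toy sketch that is my own illustration and has nothing to do with how CppMem is implemented. It assumes sequential consistency only, so it simply enumerates all interleavings of two threads that respect program order. Thread 1 performs x = 1; r1 = y; and thread 2 performs y = 1; r2 = x;. The function name storeBufferingOutcomesSC is hypothetical.

```cpp
#include <set>
#include <utility>

// Enumerates every interleaving of
//   thread 1: x = 1; r1 = y;
//   thread 2: y = 1; r2 = x;
// under sequential consistency and collects the observed pairs (r1, r2).
std::set<std::pair<int, int>> storeBufferingOutcomesSC() {
    std::set<std::pair<int, int>> outcomes;

    // A 4-bit mask assigns each of the four time slots to a thread:
    // bit set -> thread 1 runs, bit clear -> thread 2 runs.
    for (unsigned mask = 0; mask < 16; ++mask) {
        int slotsForThread1 = 0;
        for (int slot = 0; slot < 4; ++slot) {
            if (mask & (1u << slot)) ++slotsForThread1;
        }
        if (slotsForThread1 != 2) continue;  // each thread has two operations

        int x = 0, y = 0, r1 = -1, r2 = -1;
        int step1 = 0, step2 = 0;            // next operation of each thread
        for (int slot = 0; slot < 4; ++slot) {
            if (mask & (1u << slot)) {
                if (step1++ == 0) x = 1; else r1 = y;
            } else {
                if (step2++ == 0) y = 1; else r2 = x;
            }
        }
        outcomes.insert(std::make_pair(r1, r2));
    }
    return outcomes;
}
```

Under this simplified model, the pair (0, 0) never shows up. CppMem goes much further, because it also covers the weaker memory orders of the C++ memory model, where additional executions can become possible.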

Now, let’s try it out.

A Data Race

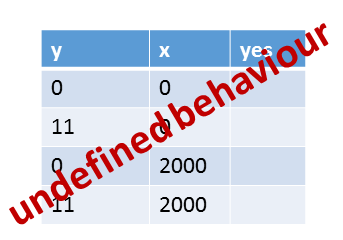

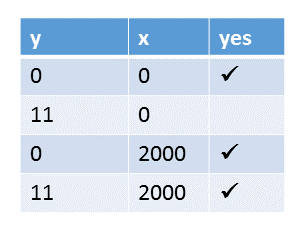

First, here is my program with a data race on x (1). The atomic y is fine: independent of the applied memory order, the operations on y are at least atomic.

```cpp
// dataRaceOnX.cpp

#include <atomic>
#include <iostream>
#include <thread>

int x = 0;
std::atomic<int> y{0};

void writing(){
  x = 2000;                                              // (1)
  y.store(11, std::memory_order_release);                // (2)
}

void reading(){
  std::cout << y.load(std::memory_order_acquire) << " "; // (2)
  std::cout << x << std::endl;                           // (1)
}

int main(){

  std::thread thread1(writing);
  std::thread thread2(reading);

  thread1.join();
  thread2.join();

}
```

Here is the equivalent program in the simplified CppMem syntax.

```
// dataRaceOnXCppMem.txt

int main(){
  int x = 0;
  atomic_int y = 0;
  {{{ {
    x = 2000;
    y.store(11, memory_order_release);
  }
  ||| {
    y.load(memory_order_acquire);
    x;
  }
  }}}
}
```

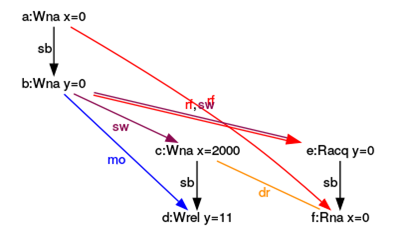

CppMem shows it immediately. The first consistent execution has a data race on x.

You can observe the data race in the graph. It is the yellow edge (dr) between the write (x=2000) and the read operation (x=0).
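For comparison, here is a sketch of how the race on x could be avoided. It is my assumption of a reasonable fix, not an output of CppMem: the reading thread only touches x after it has actually observed the value 11 in y. In that case, the release store synchronizes with the acquire load, and the write to x happens before the read. The function name readAfterSynchronization is hypothetical.

```cpp
#include <atomic>
#include <thread>

// Hypothetical helper: returns the value of x that the reading thread sees.
int readAfterSynchronization() {
    int x = 0;
    std::atomic<int> y{0};
    int seen = -1;

    std::thread writer([&] {
        x = 2000;                                // plain write
        y.store(11, std::memory_order_release);  // publishes the write to x
    });

    std::thread reader([&] {
        // Wait until the release store becomes visible.
        while (y.load(std::memory_order_acquire) != 11) {
            std::this_thread::yield();
        }
        seen = x;  // ordered after x = 2000 by the acquire-release pair
    });

    writer.join();
    reader.join();
    return seen;
}
```

The difference to dataRaceOnX.cpp is the loop: a single load of y may return 0, in which case nothing orders the read of x after the write.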

What’s next?

Of course, there are many more rules on concurrency in the C++ Core Guidelines. The next post will be about locks and mutexes.



Rainer Grimm
